The Hansen Fit Score Framework: From Ecosystem Diagnosis to Measurable Success

The IBM-Anthropic Announcement That Changed Everything

The industry has long struggled with AI implementation failure rates consistently reported at 80-95% across multiple studies. When IBM and Anthropic announced their partnership last week, they highlighted this challenge, noting fewer than 5% of enterprises are seeing measurable ROI.

That’s not a capability problem. It’s not a technology problem. It’s a readiness problem—and more specifically, it’s an ecosystem alignment problem that no one else is measuring.

After spending the last week analyzing this announcement through the Hansen Fit Score (HFS) lens, mapping it against the Coupa-Scoutbee acquisition, and scoring dozens of practitioners, providers, analysts, and consultancies across all three HFS dimensions, I can now explain—mathematically—why the failure rate is so predictable, and more importantly, how to beat it.

The Fundamental Insight: Success is Multiplicative, Not Additive

Here’s what the industry gets wrong: procurement technology success is treated as a vendor selection problem when it’s actually an ecosystem co-production challenge.

But before we dive into the solution, we need to understand how the Hansen Fit Score framework reconciles with—and explains—the failure rates we already know.

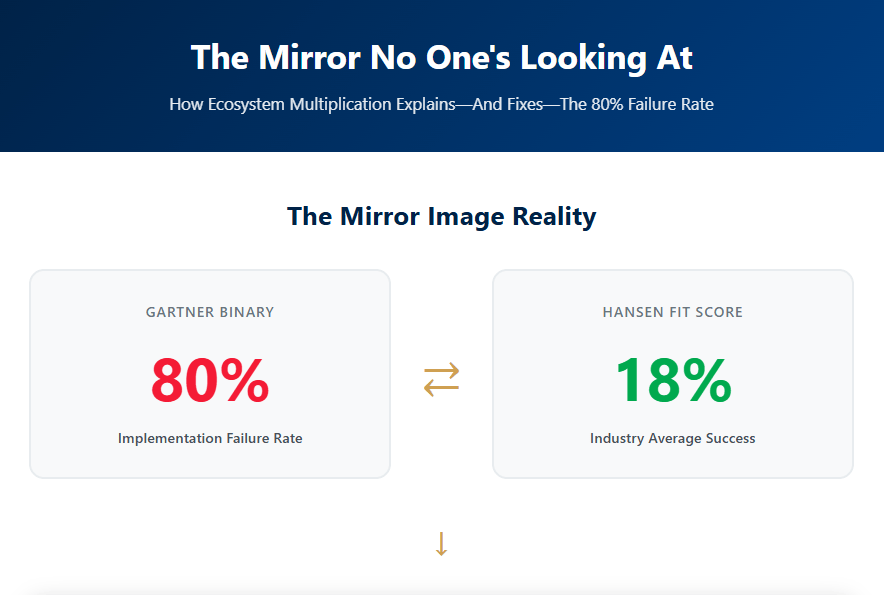

The Mirror Image: How HFS Validates Industry Data

Gartner reports an 80% implementation failure rate. That’s a binary measure: did the project succeed or fail?

The Hansen Fit Score measures success probability from the opposite angle. When we assess typical ecosystem configurations (practitioners, providers, analysts, consultants averaging 6.5-7.0 HFS), we calculate an 18% success probability.

These aren’t contradictory—they’re mirror images of the same reality:

- Gartner: 80% fail / 20% succeed (failure-focused)

- HFS Industry Average: 18% succeed / 82% fail (success-focused)

The numbers align almost perfectly. The HFS framework explains WHY Gartner sees what they see.

The Two-Layer Success Model

But there’s a critical distinction between implementation success and ROI realization:

Layer 1: Implementation Success

- Industry average: 18-20% succeed at deployment and adoption

- This is what Gartner measures

Layer 2: ROI Realization

- Of those 18-20% that implement successfully, only ~28% achieve measurable, sustained financial ROI

- 18% × 28% ≈ 5% ← This explains IBM’s observation

The complete picture: When typical ecosystems attempt ProcureTech AI implementations, only 18% succeed at implementation, and of those, only 28% generate measurable ROI. Net result: ~5% total ROI realization—exactly what IBM and Anthropic cited as the industry challenge.

How Hansen Framework Changes the Math

When you optimize your ecosystem using HFS principles:

Implementation Success Improves:

- From 18% to 37% (optimized ecosystem with all stakeholders ≥7.5 HFS)

- Gartner equivalent: Failure rate drops from 80% to 63%

- Result: Implementation success roughly doubles

ROI Conversion Improves:

- From 28% to ~40% (because readiness-gated implementations with strong governance sustain better)

Total ROI Realization:

- Industry: 18% × 28% ≈ 5%

- Hansen-Optimized: 37% × 40% ≈ 15%

- Result: ROI realization triples

This isn’t theoretical—it’s mathematical. The Hansen Fit Score framework doesn’t just measure the problem; it architects the solution.
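
The two-layer arithmetic above can be sketched in a few lines of Python. This is only an illustration of the multiplication described in this section; the function name `total_roi_realization` is my own label, not part of any published HFS tooling.

```python
def total_roi_realization(implementation_rate: float, roi_conversion_rate: float) -> float:
    """Share of all attempted implementations that end with measurable ROI."""
    return implementation_rate * roi_conversion_rate


# Layer 1 and Layer 2 rates quoted in the text above
industry = total_roi_realization(0.18, 0.28)    # typical ecosystem
optimized = total_roi_realization(0.37, 0.40)   # HFS-optimized ecosystem

print(f"Industry:  {industry:.1%}")
print(f"Optimized: {optimized:.1%}")
print(f"Uplift:    {optimized / industry:.1f}x")
```

The uplift comes out just under 3x, which is where the "ROI realization triples" rounding comes from.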

Why This Matters

Your implementation success isn’t determined by picking the “best” provider. It’s determined by the multiplicative interaction of four stakeholder readiness scores:

Success Rate = (Practitioner HFS × Provider HFS × Analyst HFS × Consultant HFS) / 10,000

This isn’t a metaphor. It’s mathematics.

When Lundbeck (7.9 HFS) partners with Focal Point (8.3), Hackett Group (7.6), and KPMG (7.4), the composite success probability is:

7.9 × 8.3 × 7.6 × 7.4 = 3,688 / 10,000 = ~37%

That’s the best-case scenario in today’s market, and even then only about 1 in 3 implementations succeeds.

But when LCBO (6.8) partners with TealBook (5.5), Spend Matters (6.8), and Deloitte (7.0), the math becomes brutal:

6.8 × 5.5 × 6.8 × 7.0 = 1,780 / 10,000 = ~18%

That’s the industry average—which perfectly explains the 80% failure rate we see in practice.
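
For readers who want to reproduce the two calculations above, here is a minimal Python sketch. It assumes each composite score is on the 0-10 scale and is divided by 10 before multiplying, which is equivalent to dividing the raw product by 10,000. The helper name `ecosystem_success` is hypothetical.

```python
from math import prod


def ecosystem_success(scores: list[float]) -> float:
    """Multiply composite HFS scores (0-10 scale), normalised to a 0-1 probability."""
    return prod(score / 10 for score in scores)


# Practitioner, provider, analyst, consultant scores from the two examples above
lundbeck = ecosystem_success([7.9, 8.3, 7.6, 7.4])  # Lundbeck, Focal Point, Hackett Group, KPMG
lcbo = ecosystem_success([6.8, 5.5, 6.8, 7.0])      # LCBO, TealBook, Spend Matters, Deloitte

print(f"Lundbeck ecosystem: {lundbeck:.0%}")
print(f"LCBO ecosystem:     {lcbo:.0%}")
```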

The Three-Dimensional Assessment That Reveals Everything

The Hansen Fit Score evaluates every stakeholder—practitioners, providers, analysts, and consultants—across three critical dimensions:

1. Tech Capability (0-10)

Can the technology actually deliver what it promises? This includes:

- Feature completeness and integration depth

- AI/ML precision, recall, and model governance

- MCP compatibility and canonical rails support

- Data quality and lineage auditability

2. Behavioral Alignment (0-10)

Does the solution reinforce or fight against how people actually work? This measures:

- Human-in-the-loop workflows vs. autonomous black boxes

- Exception handling and policy enforcement

- Change management methodology

- Adoption metrics and user experience

3. Readiness Compensator (0-10)

What minimum organizational maturity is required for success? This assesses:

- Governance frameworks and audit trails

- Canonical rails existence and quality

- Cross-functional alignment capacity

- Process documentation and change readiness

The composite HFS for a stakeholder is the average of these three scores. The ecosystem success rate, by contrast, is the product of the four stakeholders’ composite scores, divided by 10,000.
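
As a rough sketch of how a single stakeholder's composite could be computed from the three dimensions, consider the following. The class name, field names, and the example scores are all hypothetical; only the "average of three 0-10 scores" rule comes from the text.

```python
from dataclasses import dataclass


@dataclass
class HFSAssessment:
    tech_capability: float        # 0-10
    behavioral_alignment: float   # 0-10
    readiness_compensator: float  # 0-10

    def composite(self) -> float:
        """Simple average of the three dimension scores."""
        return (self.tech_capability
                + self.behavioral_alignment
                + self.readiness_compensator) / 3


# Hypothetical scores for illustration only, not an actual assessment
example = HFSAssessment(tech_capability=8.0,
                        behavioral_alignment=7.0,
                        readiness_compensator=7.5)
print(f"Composite HFS: {example.composite():.1f}")
```

Note that averaging lets a strong dimension offset a weak one inside a single stakeholder, while the multiplication across stakeholders does not.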

The Shocking Reality: What the Scores Reveal

After conducting comprehensive 3D HFS assessments across the procurement ecosystem, here’s what emerged:

ProcureTech Providers (The Good News)

Three providers earned the HFS “shortlist” with composite scores of 8.3/10:

- ConvergentIS: Best Metaprise orchestration (9.0), SAP-native strength

- Focal Point: Highest behavioral alignment (9.2), practitioner-built governance

- AdaptOne: Strongest strand commonality (9.1), audit-ready compliance

All three meet the ≥7.5 readiness threshold required for ecosystem success.

Analyst Firms (The Problem Child)

The analyst world shows troubling gaps:

- Hackett Group (7.6): Best of breed due to benchmarking rigor and process awareness

- Spend Matters (6.8): Tech coverage strong but feature-focused over readiness

- Gartner (6.7): Vendor-pay model severely impacts behavioral alignment (5.8)

Critical insight: Only Hackett Group clears the 7.5 readiness threshold, and only narrowly, indicating systemic misdirection in evaluation criteria.

Consultancies (The Capability-Without-Proof Trap)

All major consultancies cluster around 7.0-7.4:

- KPMG (7.4): Strongest governance capability (8.2) due to audit heritage

- Accenture (7.0): Tech-first posture with weak behavioral alignment

- Deloitte (7.0): Chatbot failure validates readiness gaps despite strong credentials

Key finding: Brand prestige ≠ readiness alignment. None demonstrates true readiness-first implementation methodology.

Practitioners (The Wide Variance)

Organizations range from 6.8 to 7.9 composite HFS:

- Lundbeck & Novartis (7.9): Pharma regulatory excellence translates to procurement governance

- Peak Utilities (7.3): Strong behavioral discipline, moderate tech maturity

- LCBO (6.8): Public sector constraints limit capability despite operational rigor

Sobering reality: Even the highest-scoring practitioners only achieve ~37% success rates when paired with best-in-class ecosystem partners.

Why One Weak Link Destroys Everything

This is where the multiplicative nature becomes devastating.

Consider the “Magic Quadrant Trap”—when Novartis (7.9) partners with Coupa-Scoutbee (7.0 after AI issues), Gartner (6.7), and Accenture (7.0):

7.9 × 7.0 × 6.7 × 7.0 = ~26% success rate

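Even though the practitioner is excellent and the other stakeholders are “good enough,” Gartner’s composite score (6.7, pulled down by a 5.8 behavioral alignment score driven by vendor-pay model conflicts) acts as a multiplier on the entire ecosystem. Swapping in the Hackett Group (7.6) alone lifts the result to ~29%; the full 11-percentage-point gap to the 37% optimal configuration also reflects the provider and consultant scores.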

A single stakeholder scoring 5.5 (like TealBook) can reduce success probability by 20% or more in relative terms, even when every other stakeholder is strong. Replacing a 7.0 with a 5.5, for example, cuts the product by about 21%.

This is why “good enough” thinking is fatal in ProcureTech AI implementations.
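
The weak-link effect is easy to test by swapping one score at a time. The sketch below uses the “Magic Quadrant Trap” scores from this section; the helper names and the choice of which stakeholder to swap are my own assumptions for illustration.

```python
from math import prod


def ecosystem_success(scores) -> float:
    """Product of composite HFS scores (0-10), normalised to a 0-1 probability."""
    return prod(score / 10 for score in scores)


def with_swap(ecosystem: dict, role: str, new_score: float) -> float:
    """Success probability after replacing one stakeholder's score."""
    return ecosystem_success({**ecosystem, role: new_score}.values())


trap = {"practitioner": 7.9, "provider": 7.0, "analyst": 6.7, "consultant": 7.0}

baseline = ecosystem_success(trap.values())
analyst_upgrade = with_swap(trap, "analyst", 7.6)  # Hackett Group instead of Gartner
weak_provider = with_swap(trap, "provider", 5.5)   # one stakeholder drops to 5.5

print(f"Baseline:        {baseline:.0%}")
print(f"Analyst at 7.6:  {analyst_upgrade:.0%}")
print(f"Provider at 5.5: {weak_provider:.0%}")
print(f"Relative drop:   {1 - weak_provider / baseline:.0%}")
```

Because the model is a pure product, the relative change from any single swap is just the ratio of the new score to the old one, regardless of how strong the other three stakeholders are.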

The Hansen Strategy Framework: How to Beat the Odds

After analyzing these interdependencies, I developed a four-phase practitioner strategy that can improve success rates from 18% (industry average) to 37%+ (optimized ecosystem).

Phase 0: Readiness Gate (BEFORE Investigation)

Mandatory prerequisite: Achieve ≥7.5 HFS across all three dimensions before evaluating ANY vendors.

Why this matters: At 6.8 HFS, even the highest-scoring partners in this analysis (8.3, 7.6, 7.4) yield only about 32% success. At 7.9 HFS, you unlock 37%. Your readiness is the foundation, so invest here first.

Key actions:

- Build canonical rails: standardized data pathways across procurement, finance, IT/security, and legal

- Establish governance checkpoints: who approves what, when, and based on what criteria

- Secure executive sponsorship with defined success metrics

- Document process maturity and create change management capacity

Exit criteria:

- Canonical rails are operational for at least one workflow

- Self-assessed HFS ≥7.5 with evidence

- Change capacity proven through a successful pilot (any initiative)
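
One way to make the Phase 0 gate concrete is a small check that reports which dimensions still fall short of 7.5. This is a sketch under my own assumptions: the dimension keys and the sample self-assessment are hypothetical, and a real gate would also require the evidence named in the exit criteria.

```python
READINESS_THRESHOLD = 7.5  # Phase 0 minimum per dimension, from the text above


def readiness_gaps(dimensions: dict[str, float],
                   threshold: float = READINESS_THRESHOLD) -> dict[str, float]:
    """Return each dimension below the threshold with its shortfall."""
    return {
        name: round(threshold - score, 2)
        for name, score in dimensions.items()
        if score < threshold
    }


# Hypothetical self-assessment for illustration only
self_assessment = {
    "tech_capability": 7.8,
    "behavioral_alignment": 7.1,
    "readiness_compensator": 7.6,
}

gaps = readiness_gaps(self_assessment)
print("Proceed to Phase 1" if not gaps else f"Hold at Phase 0, gaps: {gaps}")
```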

Phase 1: Investigate (Ecosystem-First Evaluation)

Core principle: Evaluate providers, analysts, and consultants AS AN ECOSYSTEM, not as independent purchases.

Critical questions:

- Provider: Can they produce precision/recall benchmarks? How do they map to YOUR canonical rails? What’s their behavioral alignment methodology?

- Analyst: Do they measure readiness and behavioral dimensions, or just features? What’s their independence score?

- Consultant: What’s their governance capability? Do they walk away if readiness isn’t there?

Red flags (walk away immediately):

- Provider cannot produce dedupe rates or lineage documentation

- Analyst evaluation ignores behavioral alignment dimensions

- Consultant promises “plug-and-play” without readiness assessment

- Any stakeholder resists HFS scoring or transparency

Exit criteria:

- Provider HFS ≥8.0, Analyst ≥7.5, Consultant ≥7.3

- Ecosystem composite success probability ≥30%

- Canonical rails compatibility verified
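
The Phase 1 exit criteria can be expressed as a simple checklist function. The sketch below is illustrative only: the function name, the role keys, and the boolean `rails_verified` flag are assumptions of mine, while the thresholds are the ones listed above.

```python
from math import prod

MIN_SCORES = {"provider": 8.0, "analyst": 7.5, "consultant": 7.3}
MIN_SUCCESS_PROBABILITY = 0.30


def phase1_blockers(practitioner: float,
                    partners: dict[str, float],
                    rails_verified: bool) -> list[str]:
    """List every Phase 1 exit criterion the candidate ecosystem misses."""
    blockers = [
        f"{role} HFS {partners[role]} is below {minimum}"
        for role, minimum in MIN_SCORES.items()
        if partners[role] < minimum
    ]
    probability = prod(score / 10 for score in (practitioner, *partners.values()))
    if probability < MIN_SUCCESS_PROBABILITY:
        blockers.append(f"success probability {probability:.0%} is below 30%")
    if not rails_verified:
        blockers.append("canonical rails compatibility not verified")
    return blockers


strong = phase1_blockers(7.9, {"provider": 8.3, "analyst": 7.6, "consultant": 7.4},
                         rails_verified=True)
weak = phase1_blockers(6.8, {"provider": 5.5, "analyst": 6.8, "consultant": 7.0},
                       rails_verified=True)
print(strong)  # empty list: all criteria met
print(weak)    # every score threshold and the probability threshold are missed
```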

Phase 2: Select (Optimize for Multiplication)

Selection formula: Your Success = (Your HFS) × (Provider) × (Analyst) × (Consultant)

Optimal configurations:

- Behavioral Alignment Priority: Your HFS (7.9) × Focal Point (8.3) × Hackett (7.6) × KPMG (7.4) = 37%

- Enterprise Orchestration: Your HFS (7.9) × ConvergentIS (8.3) × Hackett (7.6) × KPMG (7.4) = 37%

- Compliance-Driven: Your HFS (7.9) × AdaptOne (8.3) × Hackett (7.6) × KPMG (7.4) = 37%

Key insight: All optimal configurations achieve the same success rate—choose based on YOUR rails compatibility and sector requirements, not brand prestige.

Exit criteria:

- Ecosystem composite success probability ≥30%

- All stakeholders ≥7.3 composite

- Contract includes behavioral metrics

- Exit criteria defined BEFORE implementation
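
To see why the three optimal configurations tie, you can enumerate every provider, analyst, and consultant combination and rank them. The sketch below uses only the composite scores quoted earlier in this article; the function name and the ranking approach are my own illustration, not a prescribed selection tool.

```python
from itertools import product
from math import prod

# Composite HFS scores as quoted in this article
providers = {"Focal Point": 8.3, "ConvergentIS": 8.3, "AdaptOne": 8.3, "TealBook": 5.5}
analysts = {"Hackett Group": 7.6, "Spend Matters": 6.8, "Gartner": 6.7}
consultants = {"KPMG": 7.4, "Accenture": 7.0, "Deloitte": 7.0}


def rank_ecosystems(practitioner_hfs: float) -> list[tuple[float, str, str, str]]:
    """Score every partner combination and sort from highest to lowest probability."""
    ranked = []
    for (prov, p), (anal, a), (cons, c) in product(
        providers.items(), analysts.items(), consultants.items()
    ):
        probability = prod(score / 10 for score in (practitioner_hfs, p, a, c))
        ranked.append((probability, prov, anal, cons))
    return sorted(ranked, key=lambda row: row[0], reverse=True)


top = rank_ecosystems(7.9)[:3]
for probability, prov, anal, cons in top:
    print(f"{probability:.0%}  {prov} + {anal} + {cons}")
```

The top three rows differ only in the provider, which is the point of the key insight above: once scores tie, rails compatibility and sector fit decide.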

Phase 3: Implement (Readiness-Gated Rollout)

Implementation doctrine: Phase-gate with evidence-based progression. Never proceed to the next phase without measuring behavioral adoption and exception rates.

Pilot Phase (60-90 days):

- Scope: 3-5 use cases on established canonical rails

- Measure: Cycle time reduction, right-first-time rate, duplicate suppression, exception handling

- Exit criteria: ≥30% cycle time reduction, <2% duplicates, ≤5% exceptions

Expansion Phase (Quarters 2-3):

- Scope: 10-15 use cases; expand to adjacent functions

- Measure: Adoption rate by persona, strand interoperability, governance compliance

- Exit criteria: ≥70% adoption, <3% misalignment, clean audit

Scale Phase (Quarter 4+):

- Scope: Enterprise-wide deployment

- Measure: Sustained performance, cost per transaction, supplier satisfaction

- Exit criteria: ≥85% adoption, sustained gains, zero major breaches

Critical rule: If the pilot fails its exit criteria, STOP. Fix behavioral/readiness gaps before scaling. Speed kills; readiness scales.
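
The phase gates lend themselves to a data-driven check. Below is a minimal sketch covering the pilot and expansion gates. The metric names are hypothetical, the sample pilot metrics are invented for illustration, and modelling a "clean audit" as zero audit findings is my assumption.

```python
import operator

# (metric name, comparison, threshold) for each gate, taken from the exit criteria above
EXIT_CRITERIA = {
    "pilot": [
        ("cycle_time_reduction", operator.ge, 0.30),
        ("duplicate_rate", operator.lt, 0.02),
        ("exception_rate", operator.le, 0.05),
    ],
    "expansion": [
        ("adoption_rate", operator.ge, 0.70),
        ("misalignment_rate", operator.lt, 0.03),
        ("audit_findings", operator.eq, 0),  # assumption: "clean audit" means zero findings
    ],
}


def failed_criteria(phase: str, metrics: dict[str, float]) -> list[str]:
    """Names of the exit criteria that the measured metrics do not satisfy."""
    return [
        name
        for name, compare, threshold in EXIT_CRITERIA[phase]
        if not compare(metrics[name], threshold)
    ]


# Hypothetical pilot results for illustration only
pilot_metrics = {"cycle_time_reduction": 0.34, "duplicate_rate": 0.025, "exception_rate": 0.04}

blockers = failed_criteria("pilot", pilot_metrics)
print("Proceed to expansion" if not blockers else f"STOP and fix: {blockers}")
```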

Phase 4: Ongoing Success (Sustain & Evolve)

Continuous readiness principle: HFS scores decay without active maintenance. Quarterly re-assessment is mandatory, not optional.

Key activities:

- Quarterly HFS re-assessment for all stakeholders

- Exception analysis: What patterns repeat? (signals rail gaps or behavioral misalignment)

- Behavioral reinforcement: Super-user communities, continuous training, feedback loops

- Performance monitoring: <3% exceptions, ≥85% adoption, <2% duplicates (sustained)

Red flags requiring intervention:

- Any stakeholder HFS drops below 7.0

- Exception rate trending upward

- Adoption rate declining

- Provider stops publishing metrics
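
A quarterly review of the first three red flags could be automated along these lines. This is a sketch with several assumptions labeled in the code: "trending" is treated as a strict rise or fall across the supplied readings, and all names and sample figures are hypothetical. The fourth flag (provider stops publishing metrics) needs a human check and is left out.

```python
def is_rising(series: list[float]) -> bool:
    """True when every reading is higher than the one before it (assumed trend test)."""
    return len(series) >= 2 and all(
        later > earlier for earlier, later in zip(series, series[1:])
    )


def quarterly_red_flags(hfs_scores: dict[str, float],
                        exception_rates: list[float],
                        adoption_rates: list[float]) -> list[str]:
    """Collect the red flags from the list above that the quarterly data triggers."""
    flags = [
        f"{name} HFS {score} is below 7.0"
        for name, score in hfs_scores.items()
        if score < 7.0
    ]
    if is_rising(exception_rates):
        flags.append("exception rate trending upward")
    if is_rising(adoption_rates[::-1]):  # reversed and rising means steadily falling
        flags.append("adoption rate declining")
    return flags


# Hypothetical readings, oldest quarter first
flags = quarterly_red_flags(
    hfs_scores={"practitioner": 7.9, "provider": 8.3, "analyst": 6.7, "consultant": 7.4},
    exception_rates=[0.021, 0.026, 0.031],
    adoption_rates=[0.86, 0.88, 0.87],
)
print(flags)
```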

The Procurement Insights Differentiator

No other framework explains WHY 95% of AI implementations fail while simultaneously showing HOW to fix it.

The Hansen Fit Score isn’t just a measurement—it’s the roadmap to 2-3x success rate improvement by elevating all stakeholders to the ≥8.0 threshold.

This is why practitioners, providers, analysts, AND consultants all need HFS scores. Success is co-produced, and the math proves it.

When IBM and Anthropic talk about addressing the “95% failure rate,” they’re describing the symptom. The Hansen Fit Score framework diagnoses the root cause—ecosystem readiness misalignment—and provides the operational cure.

The Path Forward: From 18% to 37% (and Beyond)

The industry average success rate is 18%. That’s not acceptable.

By following the Hansen Strategy Framework:

- Build YOUR readiness to ≥7.5 HFS before selecting vendors

- Architect ecosystem partnerships for multiplicative success (≥30% probability)

- Implement with phase-gated discipline and evidence-based progression

- Sustain through quarterly re-assessment and continuous readiness

You can achieve 37%+ success rates—more than double the industry average.

And if the industry elevates all stakeholders to ≥9.0 HFS (strong across tech, behavioral, and readiness), we unlock 66% success rates. That’s not hype. That’s the mathematical reality of what happens when readiness, governance, and canonical rails become the standard rather than the exception.
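
The 66% figure follows directly from raising a uniform score to the fourth power, and the same relationship can be inverted to ask what uniform score a target success rate would require. The sketch below assumes exactly four stakeholders with identical composite scores; both function names are hypothetical.

```python
def uniform_success(score: float, stakeholders: int = 4) -> float:
    """Success probability when every stakeholder has the same composite HFS (0-10)."""
    return (score / 10) ** stakeholders


def required_uniform_score(target: float, stakeholders: int = 4) -> float:
    """Uniform composite HFS needed to reach a target success probability (0-1)."""
    return 10 * target ** (1 / stakeholders)


print(f"All at 8.0: {uniform_success(8.0):.0%}")
print(f"All at 9.0: {uniform_success(9.0):.0%}")
print(f"Score needed for 50%: {required_uniform_score(0.50):.1f}")
```

Under this model, even a coin-flip success rate requires every stakeholder to score about 8.4, which is above every composite score reported in this article.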

The Bottom Line

The 95% AI failure rate isn’t a capability problem. It’s a readiness alignment problem across the entire ecosystem.

The difference between the 18% who succeed by accident and the 37% who succeed by design is simple: they measured what actually matters.

Tech capability alone won’t save you. Behavioral alignment alone won’t save you. Readiness alone won’t save you.

But tech capability × behavioral alignment × readiness—measured, proven, and sustained across every stakeholder in your ecosystem—that’s the formula for success.

And now, for the first time, you have the framework to make it happen.

Take Action

Ready to assess your ecosystem readiness?

Start with Phase 0: Conduct an honest 3D HFS self-evaluation. Don’t wait for the perfect tool—build your canonical rails first.

Want to dive deeper?

Download the full Hansen Fit Score Practitioner Strategy Framework, including:

- Phase-by-phase implementation checklists

- HFS self-assessment templates

- Vendor evaluation scorecards

- Quarterly re-assessment protocols

Have questions about your specific situation?

The Procurement Insights community is here to help practitioners navigate the readiness journey. Share your experiences, challenges, and wins—together, we’re building the institutional knowledge that transforms the 95% failure rate into the exception rather than the rule.

Jon W. Hansen is the creator of the Hansen Method and the Hansen Fit Score framework. His research on Strand Commonality Theory received Scientific Research & Experimental Development (SR&ED) funding from the Government of Canada in 1998, leading to the creation of an agent-based Metaprise platform for the Department of National Defence. Today, his frameworks provide the only methodology that measures ecosystem readiness alignment across procurement technology implementations.

#HansenFitScore #CanonicalRails #ReadinessBeforeTechnology #ProcurementTransformation #AIinProcurement #ProcureTech

What’s your organization’s HFS? Share your self-assessment results, and let’s discuss how to architect your success.


BONUS COVERAGE – THE MIRROR

Tahj Bomar

October 9, 2025

Phase 0 is crucial. So often companies put it all