
The Naming Irony

When Microsoft launched “Copilot,” the name suggested something revolutionary:

- A partner, not a tool

- Collaboration, not command

- Shared flight, not solo operation

The reality is more nuanced: Copilot is excellent assistive automation — autopilot for tasks, not co-pilot for outcomes.

That distinction matters more than most organizations realize.

What Microsoft Built vs. What Enterprises Need

Microsoft didn’t lie with the name — they just set the wrong expectation.

Copilot is excellent assistive automation: it helps you draft, summarize, search, and execute micro-tasks faster. It can retrieve context from tenant data, files, chats, and Microsoft Graph.

But it isn’t a co-pilot in the sense enterprises need for outcomes: a persistent engagement loop where intent, memory, and guardrails are co-managed over time.

Retrieval of context is not the same as continuity.

Suggestions are not the same as governance.

That gap — between assist and partnership — is where most “AI strategy” turns into implementation disappointment.

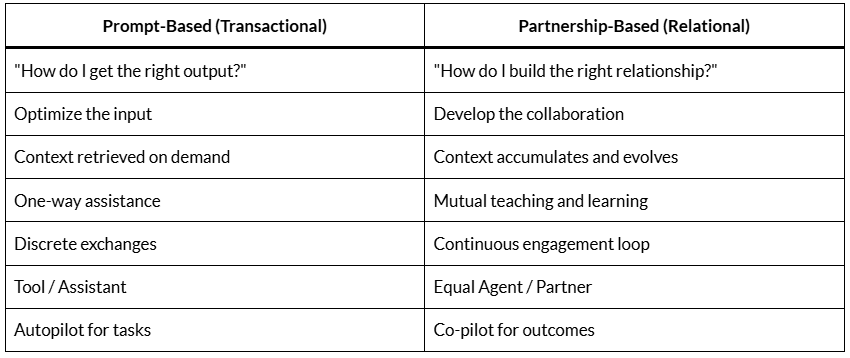

The Three Models of Human-AI Engagement

To understand why this matters, consider the three models I’ve been documenting:

Autopilot (1998 Model)

- Human sets the rules

- AI executes within parameters

- Static guardrails

- “I set, you run”

- Alleviates cognitive load on specific tasks

- Useful for micro-tasks, not outcomes

Replacement (2026 Fear)

- AI sets the rules

- Human reacts or is displaced

- No guardrails

- Surrender of control

- The nightmare scenario everyone fears

Co-pilot (True Collaboration)

- Human and AI co-develop the rules

- Continuous engagement loop

- Dynamic, refreshed guardrails

- Both teach and learn simultaneously

- Context sustains and evolves over time

- Relationship, not transaction

Microsoft named their product after the third model but built a sophisticated version of the first.

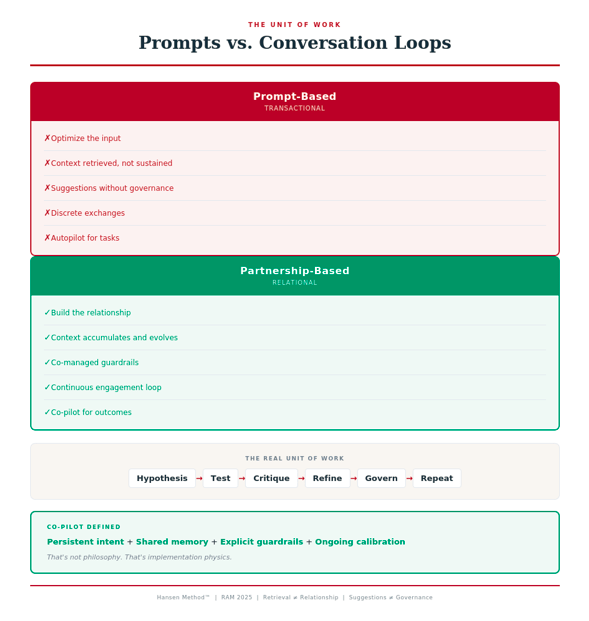

Defining Co-pilot in Operational Terms

The word “co-pilot” gets used loosely. Here’s what it actually means in implementation terms:

Co-pilot = persistent intent + shared memory + explicit guardrails + ongoing calibration.

That’s not philosophy. That’s implementation physics.

A true co-pilot:

- Sustains persistent intent — understands not just what you asked, but what you’re trying to achieve over time

- Builds shared memory — context accumulates across sessions, weeks, months

- Maintains explicit guardrails — governance that’s co-developed and refreshed, not static

- Enables ongoing calibration — both human and AI catch errors, challenge assumptions, refine together

This isn’t a product feature. It’s a relationship.
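To make the four terms concrete, here is a minimal Python sketch of what an engagement would have to track before it meets the definition above. Everything in it is an assumption made for illustration: the `Engagement` class, its field names, and the `missing()` check are hypothetical and do not describe how Microsoft Copilot or any other product works.

```python
from dataclasses import dataclass, field


@dataclass
class Engagement:
    """Hypothetical record of one human-AI working relationship."""

    # Persistent intent: the outcome being pursued over time.
    intent: str = ""
    # Shared memory: context that accumulates across sessions.
    memory: list[str] = field(default_factory=list)
    # Explicit guardrails: rule name mapped to the current rule text.
    guardrails: dict[str, str] = field(default_factory=dict)
    # Ongoing calibration: how many review rounds have happened.
    calibrations: int = 0

    def remember(self, note: str) -> None:
        """Add a piece of context that later sessions can build on."""
        self.memory.append(note)

    def calibrate(self, rule: str, text: str) -> None:
        """Refresh one guardrail and record that a review round took place."""
        self.guardrails[rule] = text
        self.calibrations += 1

    def missing(self) -> list[str]:
        """Return which of the four ingredients are still absent."""
        gaps = []
        if not self.intent:
            gaps.append("persistent intent")
        if not self.memory:
            gaps.append("shared memory")
        if not self.guardrails:
            gaps.append("explicit guardrails")
        if self.calibrations == 0:
            gaps.append("ongoing calibration")
        return gaps
```

A prompt-driven session looks like a fresh `Engagement()` every time: `missing()` returns all four items. Only an engagement that is carried forward and revisited ever returns an empty list.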

Why This Distinction Matters

The 80% AI implementation failure rate persists because organizations are buying assistive automation and expecting a collaborative partnership.

When you deploy Microsoft Copilot — or any prompt-driven AI assistant — you’re getting:

- A tool that responds to requests

- Context retrieval without sustained continuity

- Suggestions without co-managed governance

- Assistance without mutual learning

You’re getting sophisticated task automation. Which is valuable. But it’s not what the word “co-pilot” promises to most ears.

The Prompt Trap

The industry is teaching “prompt engineering” as the path to AI success. But this approach has a built-in ceiling.

Prompt engineering assumes:

- The quality of output depends on the quality of the input in that moment

- Each interaction is a discrete transaction

- Success means crafting better questions

The problem isn’t that prompts exist — everything involves some form of input. The problem is treating prompts as the unit of work.

The real unit of work is the conversation loop:

Hypothesis → Test → Critique → Refine → Govern → Repeat

When prompts are your operating model, you’re optimizing transactions. When conversation loops are your operating model, you’re building relationships.

Stop treating prompts as the unit of work. Start treating the conversation loop as the unit of value.
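The difference between a prompt and a loop can be sketched in a few lines of Python. This is an illustrative sketch only: `LoopState`, `run_cycle`, and `placeholder_step` are hypothetical names, and the placeholder stands in for whatever real human or AI work happens at each stage. The point it shows is that each stage sees everything that came before it, including prior cycles.

```python
from dataclasses import dataclass, field
from typing import Callable

# The stages named in the loop above; "Repeat" is the next call to run_cycle.
STAGES = ("hypothesis", "test", "critique", "refine", "govern")


@dataclass
class LoopState:
    """Hypothetical state that survives from one cycle to the next."""

    context: list[str] = field(default_factory=list)
    cycles: int = 0


def run_cycle(state: LoopState, step: Callable[[str, list[str]], str]) -> LoopState:
    """Run one pass through every stage; each stage receives all prior context."""
    for stage in STAGES:
        note = step(stage, list(state.context))
        state.context.append(f"{stage}: {note}")
    state.cycles += 1
    return state


def placeholder_step(stage: str, context: list[str]) -> str:
    # Stand-in for real work: it only reports how much context it was given.
    return f"saw {len(context)} prior notes"


state = LoopState()
run_cycle(state, placeholder_step)
run_cycle(state, placeholder_step)  # the second cycle starts with the first cycle's context
```

In a prompt-as-transaction model, every call would start with an empty `context`. Here the second cycle's hypothesis stage already has five notes to work from, which is the compounding the loop is meant to capture.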

From Transactions to Relationships

The Real Question

When organizations deploy AI, they need to ask:

“Are we building a relationship — or just buying a tool?”

If you’re buying a tool, call it what it is: assistive automation. An advanced assistant. Autopilot for tasks.

But don’t call it Copilot and expect the outcomes that only come from true collaboration.

The Implementation Physics

Here’s what separates organizations that succeed with AI from those that don’t:

Organizations that fail:

- Buy assistive automation expecting partnership

- Treat prompts as the operating model

- Deploy before aligning governance, decision rights, and workflows

- Discover the gap between assist and partnership after implementation

Organizations that succeed:

- Understand the difference between task automation and outcome collaboration

- Build conversation loops, not prompt libraries

- Assess readiness before deployment

- Co-develop guardrails through continuous engagement

The technology is ready for co-pilot engagement. The question is whether organizations are ready to stop buying autopilot and calling it partnership.

The Bottom Line

Microsoft Copilot is excellent at what it actually is: assistive automation that helps you draft, summarize, search, and execute micro-tasks faster.

It’s autopilot for tasks. And that’s valuable.

But it isn’t co-pilot for outcomes — not yet, and not without a fundamental shift in how organizations engage with it.

Co-pilot = persistent intent + shared memory + explicit guardrails + ongoing calibration.

That’s not a product feature. That’s a relationship.

And relationships — not transactions — are what produce outcomes.

Are you buying autopilot for tasks and expecting co-pilot for outcomes? That gap is where implementation disappointment lives.


rccram

January 6, 2026

Another great, insightful article, Jon. Copilot is a tremendously useful tool, but it requires much skill in framing the right question or series of questions, and a critical assessment of the answers one gets. I have found it very useful, but have also found, when testing what it can do, that the data it uses can be deficient. In July last year (out of curiosity) I tried to find out the highest temperature recorded in Greenland so far in 2025. I was provided with an answer to another question, so I kept rephrasing the question and kept being provided with answers that I knew were incorrect. Eventually Copilot informed me that the latest data it was able to access was from the end of 2023. One therefore has to use Copilot intelligently and critically. Any supply chain manager who fails to heed its limitations could screw up their company’s supply chains. Any executive who feels that Copilot and similar products enable them to reduce the number of supply chain staff may have a nasty shock.

piblogger

January 6, 2026

Colin Cram — exactly right. “Intelligently and critically” is the key.

What you’re describing — framing questions, re-phrasing, validating outputs — is skilled use of an assistant. And that’s valuable.

But it’s still transactional: question → answer → evaluate → repeat.

The shift I’m pointing to is when context accumulates over time — when you don’t have to re-phrase because the AI already understands what you’re trying to achieve. When the relationship compounds rather than resets.

That’s rare with Copilot today. Not because the technology can’t do it, but because the engagement model is still prompt-driven.

Your warning is the right one: any executive who thinks Copilot replaces judgment is heading for a nasty shock. The tool amplifies capability — it doesn’t substitute for it.

And as you note — data limitations are real. The AI is only as good as what it can access and what the human brings to the collaboration.

Appreciate you adding the practitioner perspective.